The WaPo published a sad, frightening opinion piece, told in artist-drawn pictures, about what it is like to moderate social media content. The piece is part of a WaPo picture series called Shifts, an illustrated history of the future of work:

‘I quit my job as a content moderator.

I can never go back to who I was before.’

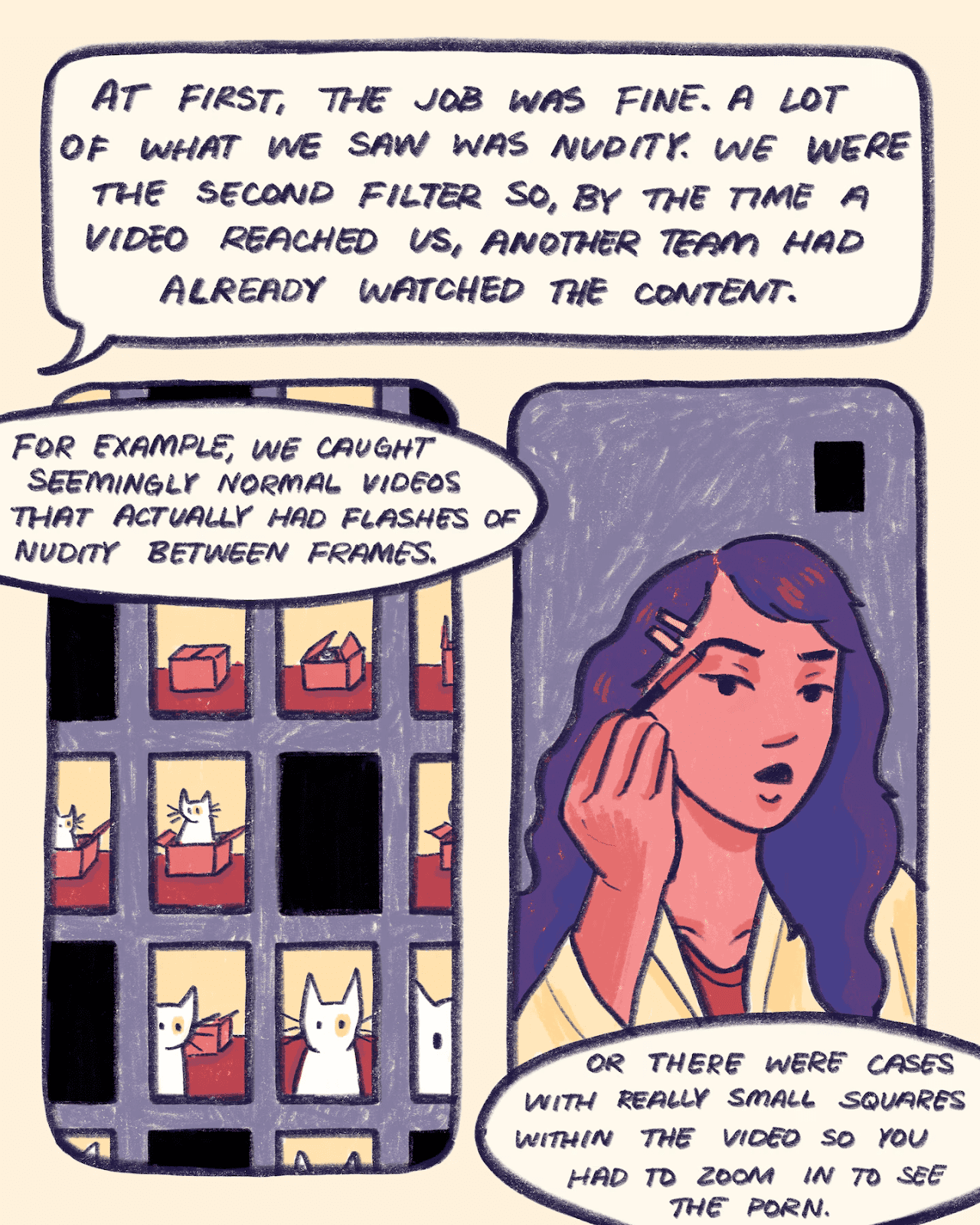

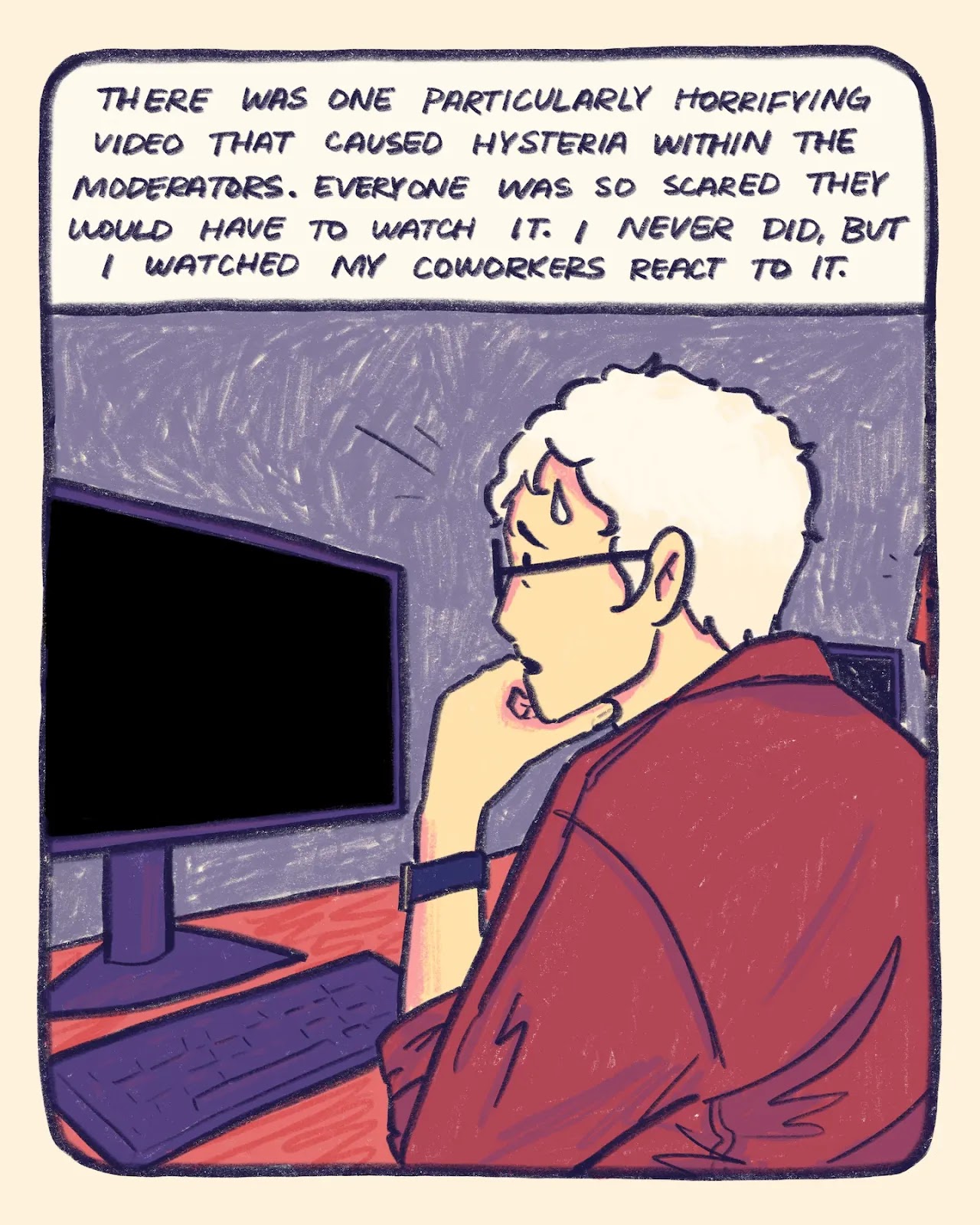

Alberto Cuadra worked as a content moderator at a video-streaming platform for just under a year, but he saw things he’ll never forget. He watched videos about murders and suicides, animal abuse, child abuse, sexual violence and teenage bullying — all so you didn’t have to. What shows up when you scroll through social media has been filtered through an army of tens of thousands of content moderators, who protect us at the risk of their own mental health.

Although Perplexity makes it sound like content moderation is a huge expense for businesses, one of its own search results indicates that Facebook spends a paltry ~1.5% of revenue on content moderation. Content reviewers are often supplied by third-party contractors. Content moderation involves nuanced decisions, especially on issues like hate speech or misinformation. Unfortunately, that work can't be fully automated, at least not yet. So human moderators take mental damage from the filth and shocking cruelty that some immoral or evil people post online.

Well, that's just how exuberant American markets, running free, wild and butt naked, do things. Perplexity, and at least some radical anti-regulation economists/plutocrats, say social media companies spend huge amounts on content moderation. So whether a business cost counts as high, medium or low is in the eye of the beholder, right?

Some interesting reader comments about the WaPo opinion piece to consider:

1. What is happening to this content moderator is called secondary trauma and it is serious. Anyone who works with trauma victims is vulnerable to it, including myself as a Child Protective Services social worker.
2. Nothing brings out the dark side of human behavior quite like anonymity. So much evil is caught and punished only because the bad behavior was witnessed. Even then, people will claim innocence. But be forewarned, every video of animal abuse, specifically, indicates a future human abuser / killer. That's a historically consistent connection. [I wonder if that is true]
3. I am generally opposed to AI, but this is one case where the use of a tool that would identify and report any abusive videos or links to them is beneficial. Human memory and exposure to violence and abuse attacks the psychological system and all bodily systems in a detrimental manner. Computers are clearly the answer in this situation.

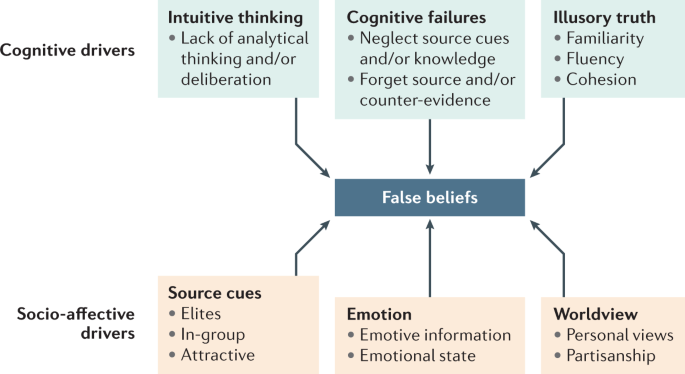

Another point to consider: The discussion above ignores toxic social media content that has helped poison and radicalize much of American politics. That is an entirely different kind of immoral/evil content.

Q: What, if anything at all, should be done about people posting brutal filth and evil online, e.g., (i) get rid of anonymity, (ii) force social media companies to spend more on content moderation and research on how to prevent and treat trauma, (iii) force social media companies to find ways to make AI do all or essentially all of the content moderation, (iv) something else, (v) some combination of all the above, (vi) nothing at all because our exuberant, butt naked free markets are handling the problem beautifully, or (vii) completely eliminate all content moderation and let millions of voices spew the beauty of 100% unrestrained free speech as some free speech absolutists want?

__________________________________________________________________

Un-numbered footnote:

A personal anecdote to consider if you are not yet in TL;DR mode:

In law school, I took two semesters of family law and one of criminal law, all in one year. At the end of that year, I developed significant insomnia. It took several years for it to go away. I attributed my insomnia to the nightmares that morphed out of various horrific court cases and decisions we had to study and internalize to some extent. Some of the cruelty and sheer savagery in what we had to learn was traumatic to me. In particular, the savagery in family law was shocking. Some parents heartlessly used their kids as tools of war against each other. Child wreckage was all over the place. Some parents were simply vicious monsters who enjoyed the literal physical and/or mental torture of their children, some as young as 2 or 3. Sometimes the kids got murdered, some got starved, some got repeatedly beaten up, and a few got all three.

I recall a guest speaker who came to two class sessions, a prominent San Francisco appeals court attorney who represented women in divorces in wealthy families. He had to carry a concealed, licensed gun to protect himself from enraged rich husbands and the thugs they sometimes hired to do "mischief." The husbands who failed to hide their wealth from the forensic accountants the attorney hired usually wound up extremely pissed off. They had to pay a lot more than they wanted to in the divorce settlement. That enraged some of them, and they threatened to kill the wife's attorney and/or the wife. Most rich husbands in this attorney's line of work took serious measures to hide wealth any way they could think of. Descriptions of real-life murders came up in those class sessions. I could go on and on and on about horrors like this, including other speakers with equally horrific stories of savagery and brutality. But to cap off this anecdote, after taking those three classes, I firmly decided I would never, ever go into criminal law, or especially the even more horrific family law.

Note that I did not watch the videos of savagery and slaughter that social media content moderators have to watch. I experienced horrors and sadness only by reading about them and hearing about them in lectures. That's a softer form of exposure. The intensity of my exposure to mayhem and horrors was less than that of content moderators, but it still really got to me.